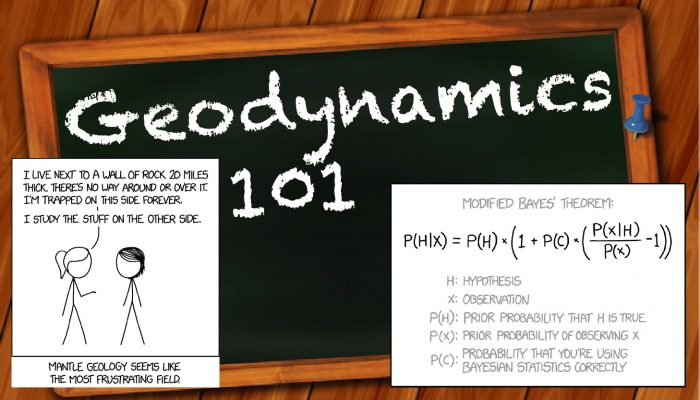

The Geodynamics 101 series serves to showcase the diversity of research topics and methods in the geodynamics community in an understandable manner. We welcome all researchers – PhD students to professors – to introduce their area of expertise in a lighthearted, entertaining manner and touch upon some of the outstanding questions and problems related to their fields. This time, Lars Gebraad, PhD s ...

Inversion 101 to 201 – Part 2: The inverse problem and deterministic inversion

Inverse theory exists to make the life of the mantle geodynamicist easier.