With the EGU General Assembly (GA) less than a month away, attendees should start planning their schedules to get the most out of the week. In today’s blog, Geodynamics (GD) Division Early Career Scientist (ECS) representative Garima Shukla highlights the GD Division’s networking events and provides an overview of key events at the GA. Networking Events: Geodynamics Division What: ECS ...[Read More]

Halokinesis: the effect and importance of the most “liquid” rocks in geodynamics

Evaporitic rocks possess unique properties that enable them to form crucial structures for petroleum systems. Salt basins are globally distributed, particularly along the Atlantic margins. Their thermal and mechanical properties can influence the Earth’s crust, altering structural styles and basin architecture, with significant implications for hydrocarbon exploration and geodynamic processes. How ...[Read More]

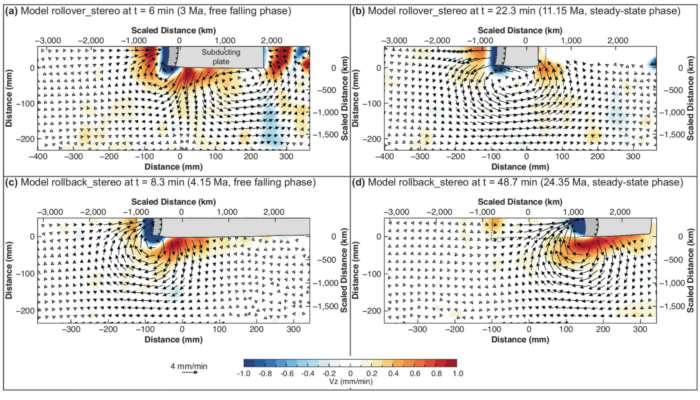

Coexisting Forces in Geodynamic Modelling: Pros, Cons, and Synergies of Analogue and Numerical Modelling

Geodynamic modelling helps us understand Earth’s internal processes by providing a framework to test hypotheses. Analogue modelling uses physical models governed by the laws of nature, with resolution down to Planck’s length. In contrast, numerical modelling employs mathematical methods to approximate solutions to the physical laws governing Earth’s processes. Each modelling approach comes with it ...[Read More]

Ice Ice Baby! Modelling the thermal evolution within the ice shell of Ganymede, Jupiter’s moon.

Ganymede, one of the Galilean moons of Jupiter and the largest moon in the Solar System, has caught scientists’ attention due to its potential for hosting life. The JUICE mission, launched from the Guiana Space Centre in French Guiana on 14 April 2023, is on its way to orbit Jupiter and conduct experiments on three of its Galilean moons (Ganymede, Europa, and Callisto), with particular emphasis on characterizing G ...[Read More]