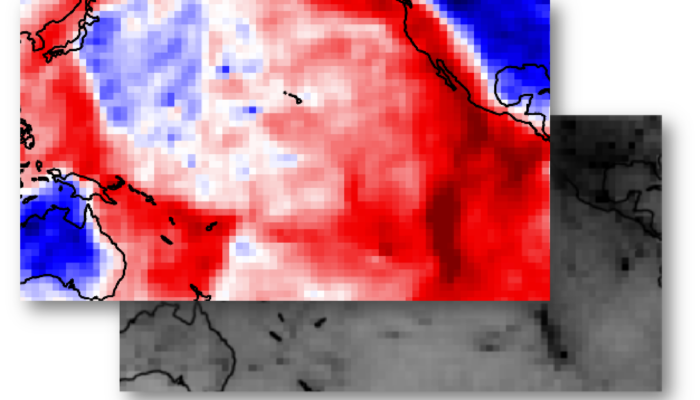

This week we have the second part of Junjie Dong’s insightful blog on modeling the early Earth. The first part (“Modeling the Early Earth: Idealization and its Aims I”) discussed the major open questions about the early Earth and the challenges of modeling it. Junjie now explores the importance of modeling as a scientific endeavor. He presents how one could more effectively model the ...

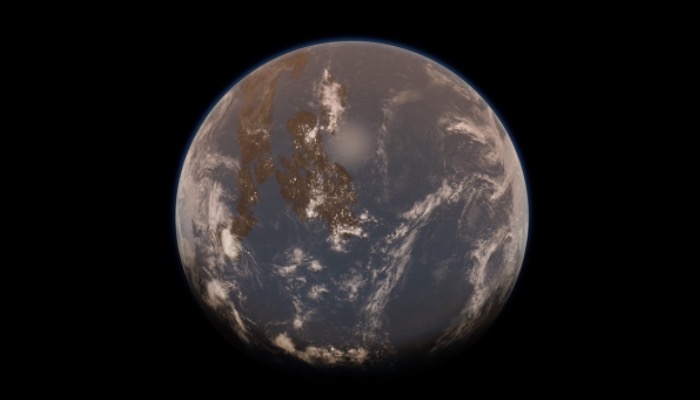

Modeling the Early Earth: Idealization and its Aims II