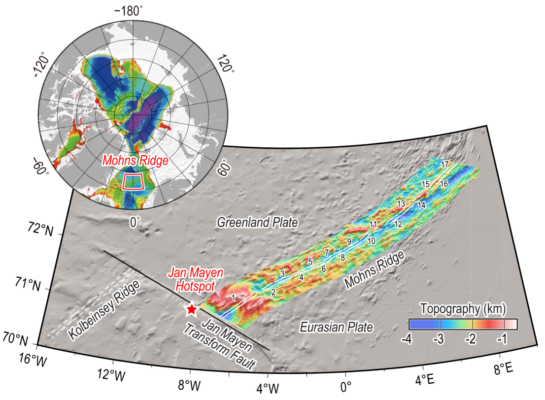

Mid-ocean ridges (MORs) and hotspots are two types of magmatic activity in the ocean. MORs are commonly associated with another tectonic feature: oceanic transform faults. Although numerous studies have examined the interactions among MORs, hotspots, and transform faults, little research has addressed cases where a hotspot and a transform fault are located at the same end ...

What is the role of a hotspot and an oceanic transform fault at an ultraslow-spreading ridge?