Join the EGU GD Blog Team!

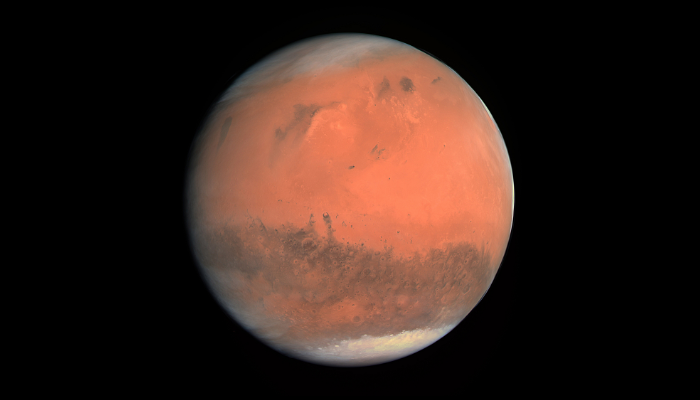

The two faces of Mars

In this week’s blog post, we will learn more about the past of our neighbouring planet Mars. Kar Wai Cheng, PhD student at the Institute of Geophysics at ETH Zurich, talks about the Martian dichotomy and how it could have formed. Humans have recognized Mars for a very long time. One of the earliest records of Mars appears on a sky map in the tomb of an ancient Egyptian astronomer. By tha ...[Read More]

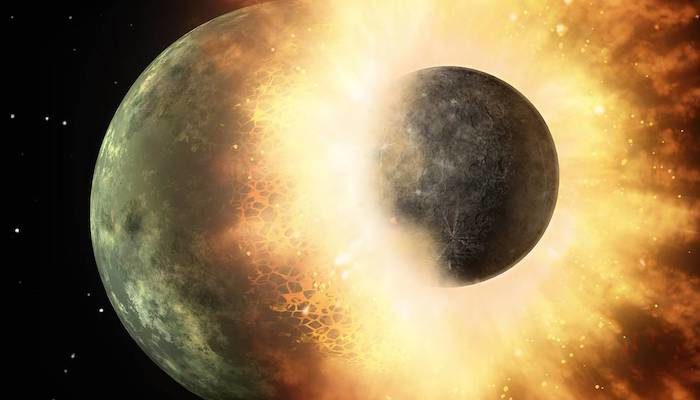

What happens when two worlds collide?

Why does the Moon have a very small core, while Mercury's makes up roughly 85% of the planet’s radius? Why are humans doing research in geoscience and not some evolved version of dinosaurs? In this week’s blog post, Harry Ballantyne, PhD student at the Department of Space and Planetary Sciences at the University of Bern, talks about large-scale collisions and how they can answ ...[Read More]

Meet the incoming GD President – Jeroen van Hunen

This week on the EGU GD Blog, we interview the newly elected incoming Geodynamics Division President, Jeroen van Hunen (Durham University). Jeroen takes over the role from Paul Tackley (ETH Zürich) for 2021-2023. Jeroen is a Professor within the Department of Geosciences, including the Geodynamics, Geophysics, and The Solid Earth research groups. He is originally from the Netherlands, having studie ...[Read More]