In 2020, we found ourselves fully immersed in the world of virtual seminar series, a trend that has continued ever since. At that time, the blog team compiled a list of seminar series of interest to the Geodynamics community. Four years have passed, bringing new additions while some series have become inactive. It’s now time for an update! Here is a non-exhaustive list of recorded seminar serie ...[Read More]

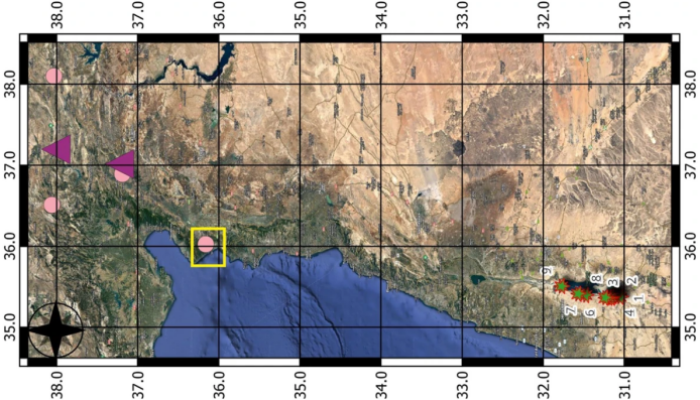

FEMR: An emerging “game changer” in predicting earthquakes and impending geohazards.

In this week’s blog, Shreeja Das, a postdoctoral researcher at the Sami Shamoon College of Engineering, Ashdod, Israel, working with Dr. Vladimir Frid, discusses her research on FEMR waves, their use as a tool for predicting impending geohazards, and some of the results she obtained with this technique while studying transform fault activity along the Dead Sea Transform fault. Earthqu ...[Read More]

Exploring the Evolution of Rift Magmatism through Numerical Modelling

Continental rifts are a striking manifestation of the forces at work in the Earth’s interior and are often associated with volcanic activity. Contrary to intuition, volcanism is not confined to rift grabens, but migrates as the rifts evolve. How and why this happens is still not clear. This week, Gaetano Ferrante from Rice University, Houston (USA) will share his research with us, showing ho ...[Read More]

The Sassy Scientist – To fly or to couch surf

One thing that the COVID pandemic has left us with is the flexibility to attend a conference either in person or virtually. This has been the case for the last three editions of the EGU GA. As the deadline for an upcoming conference approaches, we face the dilemma of whether to attend in person or opt for the virtual experience. Louis is asking: Should I fly to attend this conference or should I wat ...[Read More]