A recently published report from the European Commission’s Joint Research Centre has found that our democracy is under pressure from the influence that social media has on our political opinions and our behaviours. What can be done to mitigate this and what could it mean for our democracy, society and scientific community?

The pros and cons of living in an increasingly online world

The COVID-19 pandemic has affected our lives in an unprecedented range of ways. It is a human, economic and social crisis that has potentially changed the way we live, work, and interact with each other forever. It has taken many of our lives in an increasingly online direction, both in terms of work and in the way we socialise with our friends and family. The ability to work and socialise online has some clear benefits – it enables us to stay informed, shop without risk of spreading COVID-19, keep up with friends and family, and greatly reduces the commute to and from work! But there are drawbacks to this increasingly online way of interacting.

This month’s GeoPolicy blog features a report published by the European Commission’s Joint Research Centre, Technology and Democracy: understanding the influence of online technologies on political behaviour and decision-making. The report highlights four “pressure points”, or challenges, that emerge with increasing online interactions, particularly those that have limited public oversight or democratic governance. While it focuses on clear recommendations for European policymakers, particularly with regard to the upcoming European Democracy Action Plan, it also highlights the impact that online technologies could have on our democracy, society and scientific community.

Challenges in the digital world: four key pressure points

- The attention economy

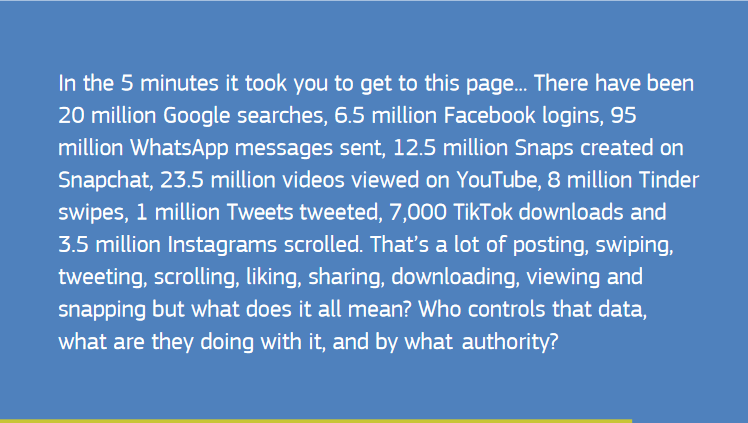

Human attention is a commodity for online platforms, which sell our online engagement to advertisers as a product. Keeping us engaged online is often key to a platform’s revenue, and as a result platforms have become adept at capturing and keeping our attention, even to the extent that our political views and actions can be shaped by it.

With only 300 likes, for example, Facebook’s algorithms can predict a user’s personality more accurately than the user’s own spouse can. This raises concerns about a platform’s ability to microtarget its users, as it enables the platform to serve highly personalised advertisements based on users’ personalities. If used politically, microtargeting has the potential to sway a user’s thoughts on partisan issues, potentially undermining democratic discourse. Because only the originator and recipient of a persuasive political message know it exists, political opponents cannot rebut it, and democratic debate moves from a public “free marketplace of ideas” to furtive manipulation.

- Choice architectures

Choice architecture refers to how options are designed and presented to consumers and the impact that this can have on their decision-making. Social media platforms encourage people to engage constantly and often make it complicated to leave the platform or to change settings to enhance privacy. Online choice architectures can be problematic, particularly when they veer towards coercion or manipulation, which may result in the user being compelled or steered toward a specific option.

- Algorithmic content curation

Algorithms touch all aspects of our experience online. Pretty much any information we encounter is curated by algorithms that are designed to maximise the platform’s commercial interests. These algorithms generally lack transparency and accountability, and they can increase polarisation by preventing individuals from receiving certain information. Furthermore, polarising content is likely to attract a high level of engagement and is therefore often prioritised by the platform’s algorithm.

From the Technology and Democracy report

- Misinformation and disinformation

The spread of misleading or intentionally false information online is a global issue. According to a survey of EU Member State residents, at least half of respondents came across fake news once a week or more. Online platforms frequently exacerbate the spread of this disinformation as a result of algorithms that promote content with a high level of engagement. This is particularly concerning when the false and misleading information has the potential to set the political agenda or incentivise extremism.

Potential impact on society and the scientific community

These four pressure points, and the overarching ability of online platforms to increase polarisation, spread disinformation, and sway views on political issues, are not only a concern for policymakers but also have the potential to impact society more broadly, including the scientific community. One example of this is the prominence of recent populist politicians who have been able to spread disinformation using online technologies. By using social media, these populist politicians are able to bypass the fact-checking that might be undertaken by traditional news sources and reach their target audience at incredible speed. For example, before he was banned from the platform, former President Trump frequently used Twitter to deny and spread lies about the science behind climate change.

Populism typically challenges the established political elite and institutionalised structures but also frequently questions the validity of academics, scientific organisations, and experts. One of the tactics commonly used by populists to do this is shock-and-chaos disinformation, a method that spreads doubt about whether the truth exists or even if it can be known at all! While trust in science appears to be at an all-time high, probably because the pandemic underscored the importance of expertise and science in managing a crisis, we cannot become complacent. It is important for us to acknowledge the potential risks and pressures that online technologies place on our democracy and subsequently on our scientific community, and to mitigate these where possible.

Finding solutions: Q&A with the authors

The EGU recently interviewed two of the report’s lead authors, Laura Smillie and Stephan Lewandowsky, to get their insights on the outcomes of the report and how scientists can help reduce the spread of misinformation.

If trust in science from the public is increasing (as indicated by both the Wissenschaft im Dialog’s Science Barometer and the Pew Research Center), do scientists really need to be concerned about the influence that social media could have on our democracy?

Stephan Lewandowsky: Chair in Cognitive Psychology, School of Psychological Science

Stephan Lewandowsky: Scientists are traditionally among the most-trusted members of societies, and that trust has increased since the onset of the pandemic. Nonetheless, we should not be complacent. There is clear evidence that social media are a staging ground for attacks on scientists, in particular climate scientists. Like many others working on climate change, I was targeted on social media by people who deny the physics of climate change, and it was important for me to understand what motivated those people. I wrote several articles about this, including some that suggested that the scientific community is not immune to influence through social media.

How can scientists ensure that public trust in science is maintained (or increased)?

Stephan Lewandowsky: Of course scientists have to be trustworthy to be trusted. That means we must be transparent and responsible in how we conduct science, and the vast majority of scientists are. There has been a revolution in transparency and the “open science” movement has (deservedly) taken over much scientific practice, although more remains to be done. However, we must also recognize that some people will oppose science for political or ideological reasons irrespective of how trustworthy scientists are. It is important to recognize this, to avoid yielding too much ground to those bad-faith actors because it achieves nothing and may in fact be damaging to science.

Your report outlined a number of policy recommendations (such as banning microtargeting for political advertisements) that will hopefully minimise the impact of the four pressure points. Is there anything that scientists can do to support these recommendations or to minimise the spread of disinformation on the topic that they are researching?

Laura Smillie: Policy Analyst, European Commission

Laura Smillie: As the report outlines, the current environment calls for many actions across many players; no one scientist can be expected to carry the burden of this responsibility. At the EU level there are a number of policies and legislative measures currently in development to help address many of the issues identified in the report. At the individual level, Steve and co-author John Cook have produced the excellent Conspiracy Theory Handbook. This is a valuable resource for scientists, particularly climate scientists, who are confronted not only with mis/disinformation but also with conspiracy theories.

A big thank you to two of the report’s lead authors, Laura Smillie and Stephan Lewandowsky, for taking the time to answer our questions!

For more information on this topic, see the full report: Technology and Democracy: understanding the influence of online technologies on political behaviour and decision-making.