Around the world, the most vulnerable, marginalized, and racialized are disproportionately impacted by a variety of environmental stressors such as extreme heat, health-harming air pollution, and the growing impacts of climate change. In the United States, research has uncovered how Black and Brown communities and those with lower socioeconomic status are exposed to higher concentrations of many a ...[Read More]

Parenting in Academia: Challenges and Perspectives

Trying to juggle teaching, advising, publishing, finding a new (or permanent) job, relocating, attending conferences, and actually doing research sometimes requires more hours in the day than exist (oh, and that global pandemic situation is sticking around). Additionally, many scientists have children or are starting a family while maintaining and building a career. In this week’s blo ...[Read More]

February 2021: A dusty month for Europe

In February 2021, two major Saharan dust events hit Europe. Because of the prevailing weather conditions in the first and last week of February, several million tons of Saharan dust blanketed the skies from the Mediterranean Sea all the way to Scandinavia. The sandy sky was observed almost everywhere in Europe (Fig. 1). Moreover, the stained cars and windows indicated the dust deposition (Fig. 2 – ...[Read More]

EGU’s Climate: Past, Present & Future and Atmospheric Sciences Divisions welcome the US back into the Paris Climate Agreement

As of 19 February 2021, the US officially re-joined the Paris Climate Agreement, a landmark international accord to limit global warming to 2°C (and ideally to 1.5°C) compared to pre-industrial levels. The Paris Climate Agreement aims to bring the world together to avoid catastrophic warming that will impact us all and to build resilience to the consequences of climate change that we are already s ...[Read More]

Using cloud microphysics to predict thunderstorms: How modelling of atmospheric electricity could save lives

The last three decades were the warmest in the history of meteorological observations in Europe. The temperature rise is accompanied by an increase in the frequency and magnitude of extreme weather and climatic events, which are the main risks to the population and the environment associated with modern climate change. An important class of such phenomena includes severe rainfall, tornadoes, squalls, and thu ...[Read More]

The acidity of atmospheric particles and clouds

Many of us learned about acidity, or pH, in high school chemistry. We learned that acids like HCl could dissociate into H+ and Cl- and the activity of those H+ ions defined the acidity. In the atmosphere, the same basic definition of acidity, or pH on the molality scale, applies to aqueous phases like suspended particles and cloud droplets. Atmospheric acidity regulates what kinetic reactions are ...[Read More]

Community Effort to explore the Papers that shaped Tropospheric Chemistry

The genesis of the idea to explore the influence of certain papers on shaping the field of tropospheric chemistry came when editing a textbook chapter I had written a decade earlier. As I edited it, I thought: what is really new and textbook-worthy from the last 10 years? In some sense, what is textbook-worthy at all? These types of questions inspired me to think about where atmospheric chemistry has ...[Read More]

Atmospheric research in the middle of the Amazon forest: The Amazon Tall Tower Observatory celebrates its anniversary

It looks like a spike, orange against the blue sky, sticking out of the green ocean of the Amazon forest: standing 325 m tall, the Amazon Tall Tower Observatory (ATTO) is the tallest structure in South America. This tower celebrates its 5th anniversary this year, while the ATTO research site, located ~150 km northeast of Manaus, Brazil, has been in operation for 10 years. During the past 5 years, ...[Read More]

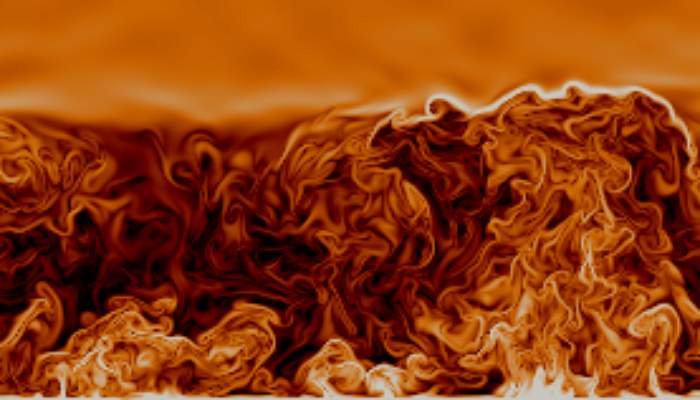

A simple model of convection to study the atmospheric surface layer

Since being immortalised in Hollywood film, “the butterfly effect” has become a commonplace concept, despite its obscure origins. Its name derives from an object known as the Lorenz attractor, which has the form of a pair of butterfly wings (Fig. 1). It is a portrait of chaos, the underlying principle hindering long-term weather prediction: just a small change in initial conditions leads to vastly ...[Read More]

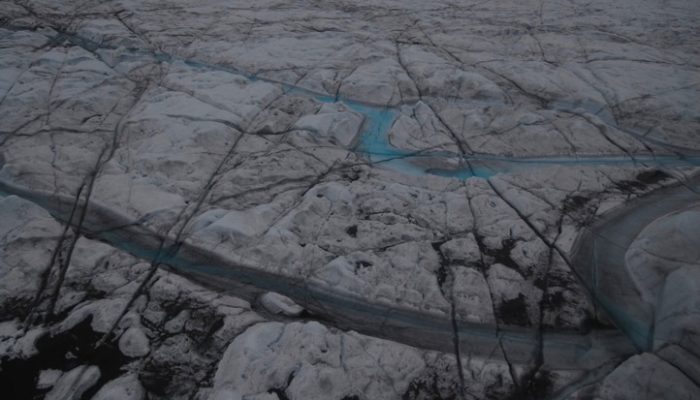

A brighter future for the Arctic

This is a follow-up from a previous publication. Recently, a new analysis of the impact of Black Carbon in the Arctic was conducted within a European Union Action. “Difficulty in evaluating, or even discerning, a particular landscape is related to the distance a culture has traveled from its own ancestral landscape. As temperate-zone people, we have long been ill-disposed toward deserts and ...[Read More]