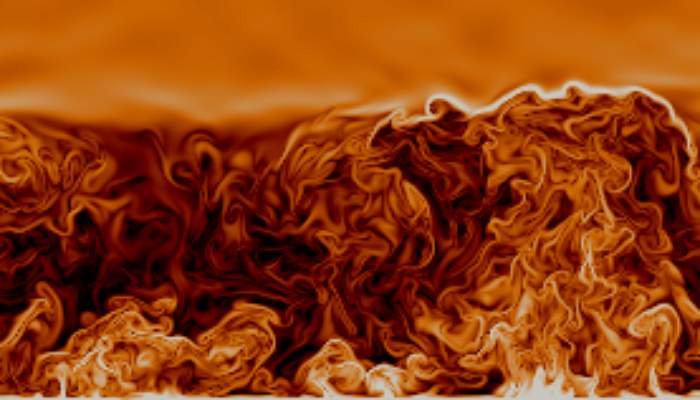

Since being immortalised in Hollywood film, “the butterfly effect” has become a commonplace concept, despite its obscure origins. Its name derives from an object known as the Lorenz attractor, which has the form of a pair of butterfly wings (Fig. 1). It is a portrait of chaos, the underlying principle hindering long-term weather prediction: just a small change in initial conditions leads to vastly ...[Read More]

A brighter future for the Arctic

This is a follow-up to a previous publication. Recently, a new analysis of the impact of Black Carbon in the Arctic was conducted within a European Union Action. “Difficulty in evaluating, or even discerning, a particular landscape is related to the distance a culture has traveled from its own ancestral landscape. As temperate-zone people, we have long been ill-disposed toward deserts and ...[Read More]

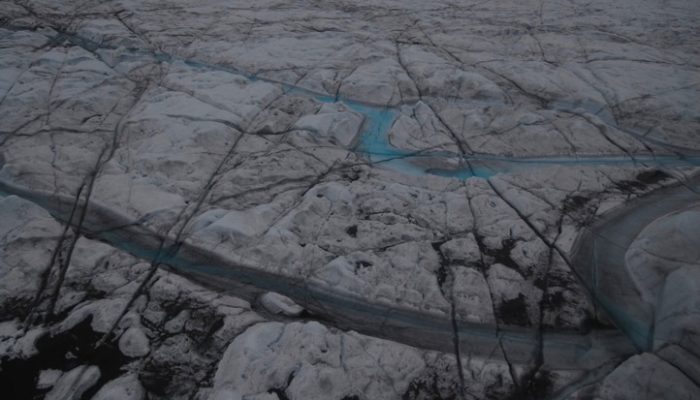

The puzzle of high Arctic aerosols

Current position: 86°24’ N, 13°29’ E (17th September 2018). The Arctic Ocean 2018 Expedition drifted for 33 days in the high Arctic and is now heading back south to Tromsø, Norway. With continuous aerosol observations, we hope to be able to add new pieces to the high Arctic aerosol puzzle to create a more complete picture that can help us to improve our understanding of the surface energy budget in ...[Read More]

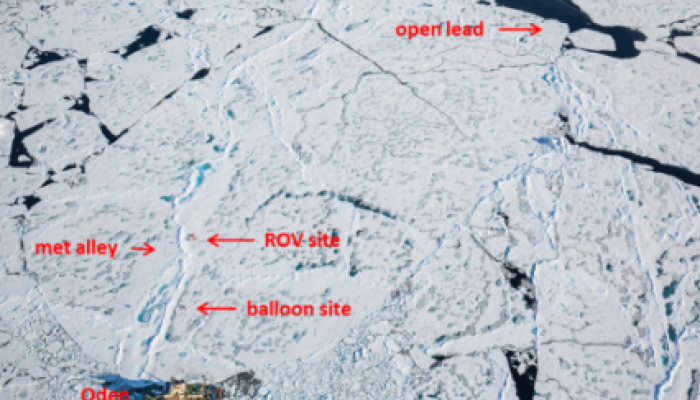

The perfect ice floe

Current position: 89°31.85 N, 62°0.45 E, drifting with a multi-year ice floe (24th August 2018). A little more than three weeks into the Arctic Ocean 2018 Expedition, the team has found the right ice floe and settled down to routine operations. Finding the perfect ice floe for an interdisciplinary science cruise is not an easy task. The Arctic Ocean 2018 Expedition aims to understand the linka ...[Read More]

How can we use meteorological models to improve building energy simulations?

Climate change calls for multiple approaches to adapting cities and mitigating the coming changes. Because buildings (residential and commercial) are responsible for about 40% of energy consumption, it is necessary to build more energy-efficient ones to decrease their contribution to greenhouse gas emissions. But what is the relation with the atmosphere? It is twofold ...[Read More]

Do you want to establish a career in the atmospheric sciences? Interview with the Presidents of the AMS and the EGU-AS Division.

Establishing a career in the atmospheric sciences can be challenging. There are many paths to take and open questions. Fortunately, those paths and questions have been thoroughly explored by members of our community and their experiences can provide guidance. In light of this, in September 2016 Ali Hoshyaripour [Early Career Scientists (ECS) representative of the European Geoscience Union’s Atmosp ...[Read More]

When cooling causes heating

Following the Montreal Protocol in the late 1980s, chlorofluorocarbons (CFCs) were replaced by hydrofluorocarbons (HFCs) as refrigerants. Unfortunately, HFCs have a global warming potential (GWP) far greater than that of the well-known greenhouse gas (GHG) carbon dioxide. Apart from the fact that this was not kn ...[Read More]

Why should we care about a building’s energy consumption?

From the 9th to the 11th of September, the Solar Energy and Buildings Physics laboratory is hosting the CISBAT conference. This international meeting is seen as a leading platform for interdisciplinary dialog in the field of sustainability in the built environment. More than 250 scientists and people from industry will be at EPFL in Lausanne to talk about topics from solar nanotechnologies to t ...[Read More]

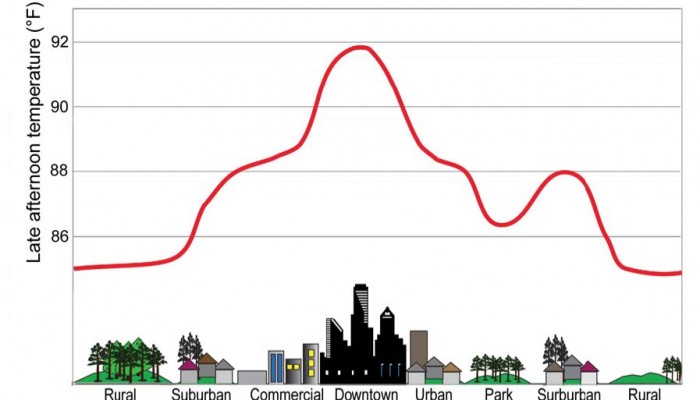

Urban Climate

The 9th International Conference on Urban Climate and the 12th Urban Environment Symposium are taking place this week in the “Pink City”, Toulouse. With the 21st Conference of the Parties (COP21) to be held in Paris in December, the obvious focus of the urban climate conference is mitigation of and adaptation to climate change in the urban environment. But, first of all, why should we even ...[Read More]

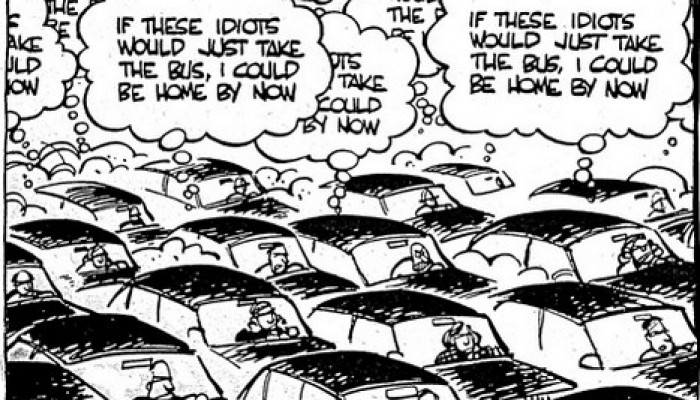

An unlikely choice between a gasoline or diesel car…

I was recently confronted with the choice of buying a “new” car, and it proved to be a very tedious task given the sheer diversity of cars on the market today. However, one of my primary concerns was, of course, to find the least polluting car for my usage (roughly 15,000 km/year). Pollution from cars (or, I should say, motor vehicles) is one of the major sources of air pollution (p ...[Read More]