As of 19 February 2021, the US officially re-joined the Paris Climate Agreement, a landmark international accord to limit global warming to 2°C (and ideally to 1.5°C) compared to pre-industrial levels. The Paris Climate Agreement aims to bring the world together to avoid catastrophic warming that will impact us all and to build resilience to the consequences of climate change that we are already s ...[Read More]

Atmospheric research in the middle of the Amazon forest: The Amazon Tall Tower Observatory celebrates its anniversary

It looks like a spike, orange against the blue sky, sticking out of the green ocean of the Amazon forest: standing 325 m tall, the Amazon Tall Tower Observatory (ATTO) is the tallest structure in South America. This tower celebrates its 5th anniversary this year, while the ATTO research site, located ~150 km northeast of Manaus, Brazil, has been in operation for 10 years. During the past 5 years, ...[Read More]

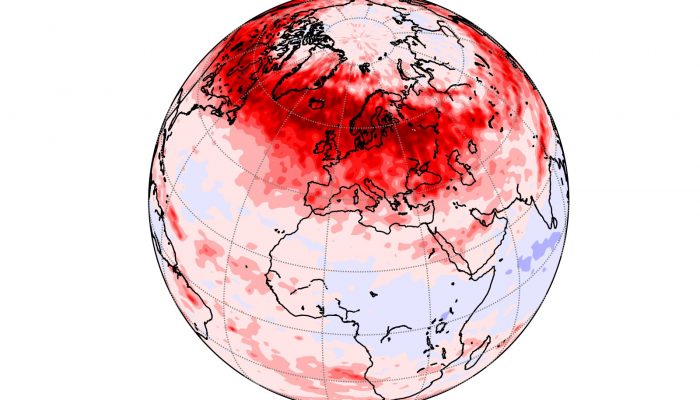

A brighter future for the Arctic

This is a follow-up from a previous publication. Recently, a new analysis of the impact of Black Carbon in the Arctic was conducted within a European Union Action. “Difficulty in evaluating, or even discerning, a particular landscape is related to the distance a culture has traveled from its own ancestral landscape. As temperate-zone people, we have long been ill-disposed toward deserts and ...[Read More]

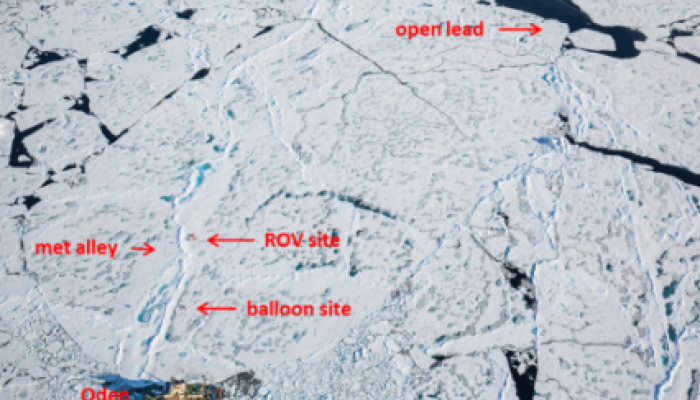

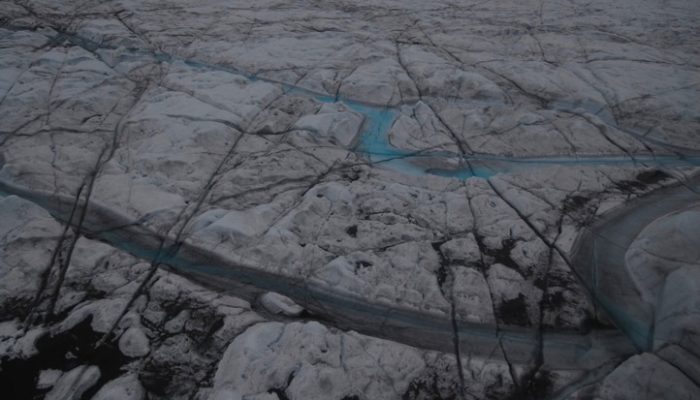

The perfect ice floe

Current position: 89°31.85 N, 62°0.45 E, drifting with a multi-year ice floe (24th August 2018). A little more than three weeks into the Arctic Ocean 2018 Expedition, the team has found the right ice floe and settled down to routine operations. Finding the perfect ice floe for an interdisciplinary science cruise is not an easy task. The Arctic Ocean 2018 Expedition aims to understand the linka ...[Read More]

Buckle up! It's about to get bumpy on the plane.

Clear-Air Turbulence (CAT) is a major hazard to the aviation industry. If you have ever been on a plane, you have probably heard the pilots warn that clear-air turbulence could occur at any time, so always wear your seatbelt. Most people will have experienced it for themselves and wanted to grip their seat. However, severe turbulence capable of causing serious passenger injuries is rare. It is defi ...[Read More]

How can we use meteorological models to improve building energy simulations?

Climate change calls for multiple approaches to adapting cities and mitigating the coming changes. Because buildings (residential and commercial) are responsible for about 40% of energy consumption, it is necessary to build more energy-efficient ones to decrease their contribution to greenhouse gas emissions. But what is the relation with the atmosphere? It is twofold ...[Read More]

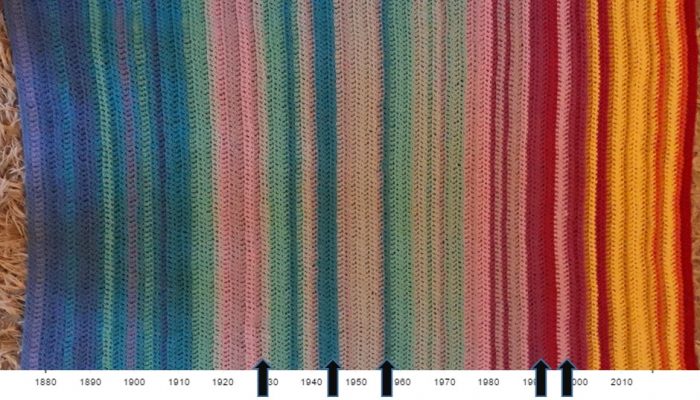

The art of turning climate change science into a crochet blanket

We welcome a new guest post from Prof. Ellie Highwood on why she made a global warming blanket and how you could do the same! What do you get when you cross crochet and climate science? A lot of attention on Twitter. At the weekend I like to crochet. Last weekend I finished my latest project and posted the picture on Twitter. And then had to turn the notifications off because it all went a bit noisy. ...[Read More]

Science Communication – Brexit, Climate Change and the Bluedot Festival

Earlier this summer, journalists, broadcasters, writers and scientists gathered in Manchester, UK, for the Third European Conference of Science Journalists (ECSJ), arranged by two prestigious organisations. Firstly, the Association of British Science Writers (ABSW), which provides support to those who write about science and technology in the UK through debates, events and awards. Secondly, the European ...[Read More]

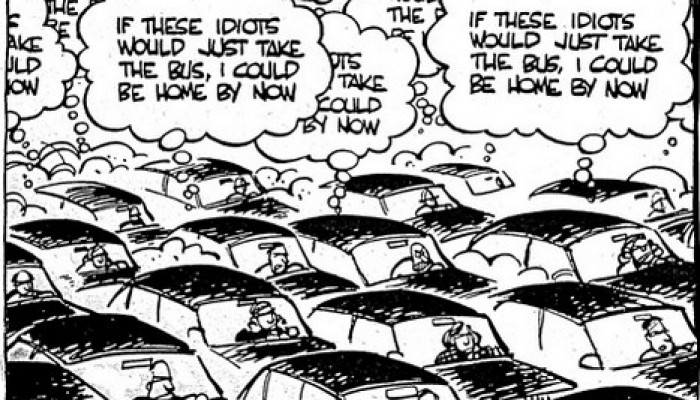

An unlikely choice between a gasoline or diesel car…

I have recently been confronted with the choice of buying a “new” car, and this has proved to be a very tedious task given the diversity of cars on the market today. However, one of my primary concerns was, of course, to find the least polluting car based on my usage (roughly 15,000 km/year). Car (or, I should say, motor vehicle) pollution is one of the major sources of air pollution (p ...[Read More]