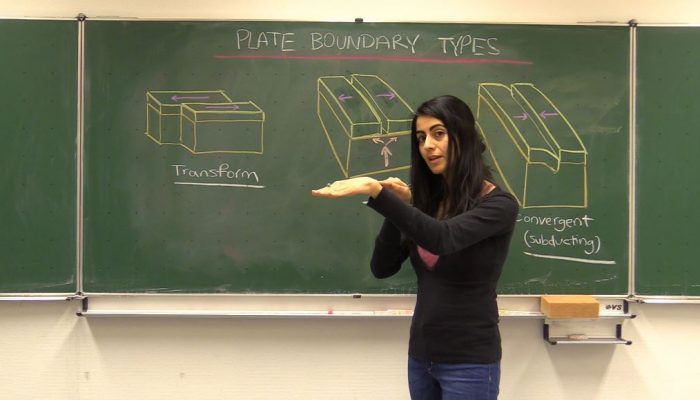

A long time ago, when I walked into the Ministry of Education building in Dushanbe (Tajikistan) to inquire about offering an earthquake education workshop in a public school, I had no research agenda, let alone any thought of publishing the work one day. All I wanted was to do something useful: share earthquake science with schoolchildren. Recognizing the value of geoscience communication, my graduat ...

How to make your geoscience communication publishable: Find out at EGU25