Ever wonder what happens to the chemicals and medications we use once they go down the drain? The fourth Sunday of September marks World Rivers Day each year, and this post is dedicated to our global rivers and to what humankind can do to preserve their waters. This matters because pharmaceuticals and household products don’t simply disappear once they are flushed or washed away. Many of these substances …

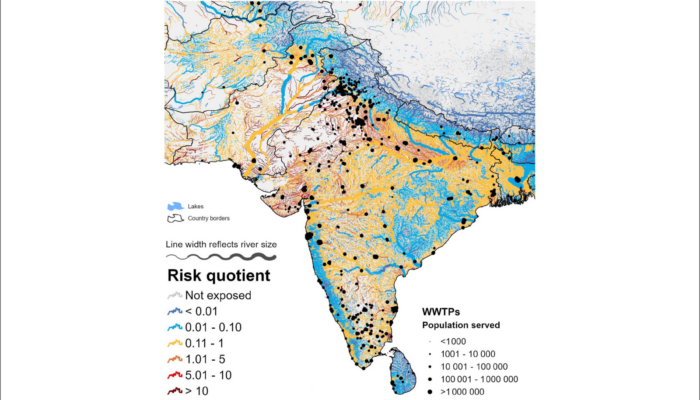

Here is how HydroFATE, a new high-resolution model, is predicting contaminant hotspots in global waterways