The palaeoecological use of land snail shells is no longer a new field of science. These shells are studied by malacologists and palaeontologists who specialise in molluscs. During the last glaciation, loess, a light yellow, fine-grained sediment, was deposited over large areas, mainly in the periglacial regions of Eurasia and North America. In addition to its many advantages, it has also provided ...[Read More]

From mountains to caves: How I found my research niche

What makes you unique as a scientist? Most of us are confronted with this question sooner or later in our careers, for example when applying for jobs or research grants. The answer is not always easy, especially for Early Career Researchers (ECRs) who are still developing a strong scientific profile. For me, being able to clearly identify my research niche has been a long process that involved developing i ...[Read More]

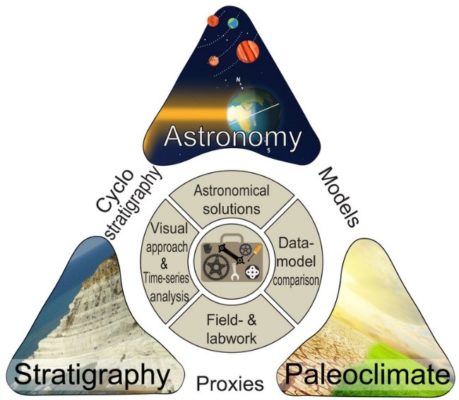

An online learning platform for cyclostratigraphy – www.cyclostratigraphy.org

Cyclostratigraphers aim to read and understand the effect of orbitally driven climate changes in the geological record through time. In doing so, they start from an important prerequisite: an imprint of insolation variations caused by Earth’s orbital eccentricity, obliquity and/or precession (Milankovitch forcing) can be preserved in the rock record. The new www.cyclostratigraphy.org webs ...[Read More]

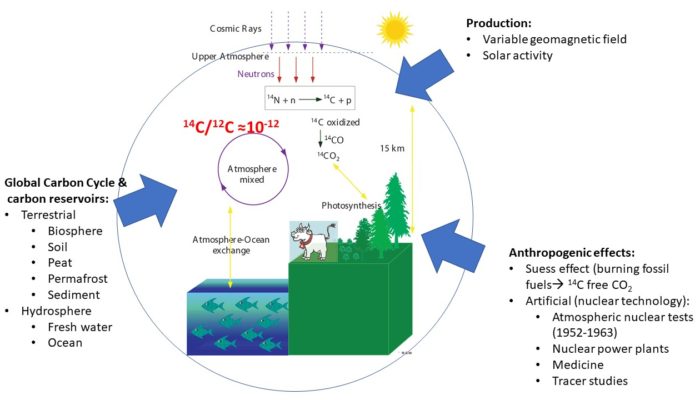

Bomb 14C – a tracer and time marker of the mid-20th century

1950 CE is known as the year zero of the so-called ‘BP time scale.’ BP stands for “Before Present” and has its roots in the development and conventions of radiocarbon dating [1]. The radioactive isotope of carbon (14C), with a half-life of 5700 years [2; 3], is a cosmogenic isotope produced in the atmosphere by secondary cosmic rays (SCR). Thermal neutrons, the SCR particle responsible for the ...[Read More]