For the Accretionary Wedge blog festival with the theme of ‘Momentous Discoveries in Geology’, Marion Ferrat discusses how a pioneering lady discovered what lies deepest inside our planet.

We know a lot about our planet today: its position in the solar system, its age, its composition and its internal workings and structure. Many laborious experiments, observations and hypotheses have helped scientists piece together its mysteries bit by bit.

Mercury, Venus, Earth and Mars – Source: NASA, Wikimedia Commons.

One branch of Earth Science in particular has revolutionised geoscientists’ understanding of the interior of the Earth: that branch is seismology.

Seismology is the study of seismic waves, in other words the study of the energy released by earthquakes. Once released, this energy travels in all directions, moving from the ‘source’ point (this can be a natural earthquake or a man-made detonation), through the interior of the Earth, and back up to the surface again.

Seismology is useful because seismic waves travel differently, and at different speeds, depending on the material they travel through. When a wave reaches a boundary between two different materials or layers within the Earth, it will be deflected: it can be transmitted to the layer below (but in a slightly different direction), travel along the boundary itself, or be reflected back to the surface. When a wave passes through the boundary and into the next layer, the amount and direction of the deflection depend on whether the wave travels faster or slower in the material below than in that above, which in turn reflects properties such as the material’s density and stiffness.
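To make the bending of a transmitted wave concrete, here is a minimal Python sketch based on Snell's law, the standard rule for refraction. It is an illustration only: the function name `refraction_angle` and the wave speeds used (6 and 8 km/s) are assumptions chosen for the example, not measurements from any particular boundary. Note that Snell's law is written in terms of the wave speed in each layer.

```python
import math


def refraction_angle(incidence_deg, v_upper, v_lower):
    """Angle (degrees from the vertical) of the transmitted ray.

    Uses Snell's law: sin(i_upper) / v_upper = sin(i_lower) / v_lower.
    Returns None when the incoming ray is beyond the critical angle,
    in which case no ray is transmitted and the energy is reflected.
    """
    sin_lower = math.sin(math.radians(incidence_deg)) * v_lower / v_upper
    if sin_lower > 1.0:
        return None  # past the critical angle: reflection only
    return math.degrees(math.asin(sin_lower))


# Hypothetical speeds in km/s, for illustration only.
into_faster_layer = refraction_angle(30.0, v_upper=6.0, v_lower=8.0)  # bends away from vertical, about 41.8
into_slower_layer = refraction_angle(30.0, v_upper=8.0, v_lower=6.0)  # bends towards vertical, about 22.0
too_shallow = refraction_angle(60.0, v_upper=6.0, v_lower=8.0)        # None: nothing is transmitted
```

A ray entering a faster layer bends away from the vertical, and one entering a slower layer bends towards it. This is why the place where a wave resurfaces carries information about what it crossed on the way.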

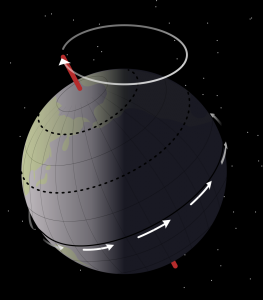

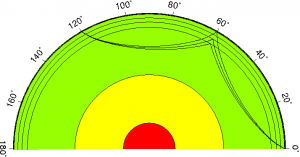

Seismic waves travelling through a layer of the Earth – Source: Julia Schäfer, Wikimedia Commons.

This multitude of possible pathways means that, by looking at how and where on Earth a seismic wave arrives back at the surface, scientists can take a good guess as to what it has travelled through. By building up this information for more and more waves, they can start to paint a good picture of what is going on beneath our feet. Studying seismic waves for geoscientists is a little bit like carrying out a CAT scan for doctors: it allows them to scan the interior of something they cannot see from the outside.

Seeing to the centre of the planet

For my ‘Momentous Discovery in Geology’, I chose to look at a huge moment in the history of seismology: the discovery of the Earth’s inner core. And along with a momentous discovery comes a momentous discoverer: Danish seismologist Inge Lehmann.

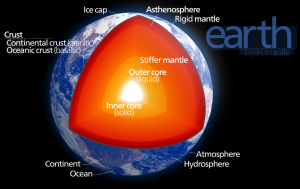

Internal structure of the Earth – Source: Kelvinsong, Wikimedia Commons.

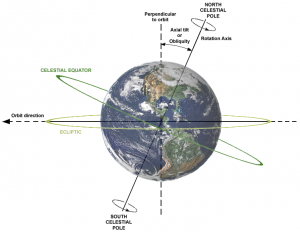

The Earth is a little bit like an onion, in that it has layers. The outermost layer, which we live on, is called the crust. It can be as thin as 10 kilometres under the oceans and as thick as 70 kilometres under large mountains such as the Himalayas.

Below the crust is the mantle, which makes up over 80% of our planet’s volume. The mantle is mainly solid but can behave in a viscous way when deformed very slowly, over geological timescales.

At the centre of the Earth lies the dense, metallic core. It is predominantly made of iron and nickel. The outer part of the core is liquid and plays an important role in influencing the Earth’s magnetic field.

The core lies nearly 3000 km beneath the surface and has a temperature of nearly 6000°C. It is too deep, too hot and too far away to explore directly with any kind of instrument. This is where seismology steps in.

A liquid ball of molten metal?

Towards the beginning of the 20th century, seismologists realised that the core must be liquid, thanks to the precious seismic waves they were observing.

When an earthquake occurs, energy is released in the form of two distinct types of seismic waves. Surface waves travel, as their name suggests, along the surface of the planet. These are the waves that cause most of the damage to human life and infrastructure. Body waves, by contrast, travel inside the Earth and get deflected by the different layers they travel through, depending on how the properties of each layer, such as its density, differ from those of the layer before.

P- and S-waves travelling through a medium – Source: Actualist, Wikimedia Commons.

Body waves can be further split into two types, distinguishable by the way in which they displace the medium they travel through: Primary waves, or P-waves, and Secondary waves, or S-waves.

These two wave types travel differently through the Earth. One of the important characteristics of S-waves is that they cannot travel through liquid. P-waves can, but they slow down considerably when not travelling through solid material.
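The reason behind both behaviours can be sketched with the textbook formulas for body-wave speeds in an elastic material: the P-wave speed is sqrt((K + 4μ/3) / ρ) and the S-wave speed is sqrt(μ / ρ), where K is the bulk modulus, μ the shear modulus (resistance to shearing) and ρ the density. A liquid has no shear strength, so μ is zero. The Python below is a minimal illustration; the function name `body_wave_speeds` and the material values are assumptions picked to be roughly rock-like, not data for any real layer of the Earth.

```python
import math


def body_wave_speeds(bulk_modulus_pa, shear_modulus_pa, density_kg_m3):
    """Return (P-wave speed, S-wave speed) in m/s for an elastic material."""
    vp = math.sqrt((bulk_modulus_pa + 4.0 * shear_modulus_pa / 3.0) / density_kg_m3)
    vs = math.sqrt(shear_modulus_pa / density_kg_m3)
    return vp, vs


# Hypothetical, roughly rock-like values (assumed for illustration).
K = 50e9      # bulk modulus, Pa
MU = 30e9     # shear modulus, Pa
RHO = 2700.0  # density, kg/m^3

vp_solid, vs_solid = body_wave_speeds(K, MU, RHO)

# The same material with its shear strength removed, standing in for a liquid.
vp_liquid, vs_liquid = body_wave_speeds(K, 0.0, RHO)
```

With the shear modulus set to zero, the S-wave speed drops to exactly zero (no S-waves at all), while the P-wave speed falls but stays positive. That matches the two properties described above.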

These properties are what alerted scientists that there was something molten down in the centre of the Earth:

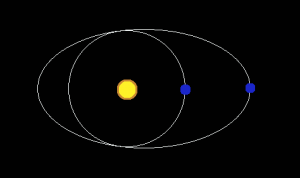

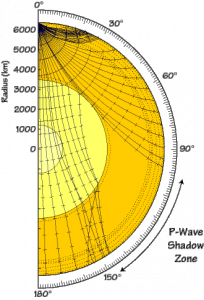

When seismic waves are released from an earthquake, they travel in all directions and should therefore be able to reach back to the surface all around the planet. However, seismologists noticed that seismic waves generated by an earthquake somewhere on the surface of the planet were not being observed at every seismometer on the surface. This no-wave zone is called the P- or S-wave shadow zone: the region where no arrivals of that wave type are recorded for a given earthquake.

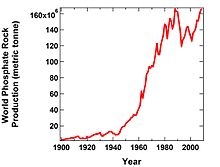

Paths of P-waves through the Earth’s core: the liquid outer core causes a shadow zone – Source: USGS, Wikimedia Commons.

The presence of this shadow zone meant that our P- and S-waves must be affected by something liquid, deep inside the Earth. And so arose the hypothesis of a liquid core.
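As a rough illustration of what the simple liquid-core picture predicts, the Python sketch below classifies a seismic station by its angular distance from the earthquake, measured in degrees around the Earth. The boundaries used, a P-wave shadow between roughly 104° and 140° and an S-wave shadow beyond roughly 104°, are commonly quoted approximate figures and are not taken from this article; the function name `expected_arrivals` is likewise an assumption made for the example.

```python
# Approximate, commonly quoted shadow-zone limits (assumed values, in degrees).
P_SHADOW_DEG = (104.0, 140.0)
S_SHADOW_START_DEG = 104.0


def expected_arrivals(distance_deg):
    """Predict which direct body waves a station should record.

    distance_deg is the angular distance between earthquake and station,
    from 0 (at the source) to 180 (the opposite side of the planet).
    The prediction assumes a core that is liquid all the way through.
    """
    if not 0.0 <= distance_deg <= 180.0:
        raise ValueError("angular distance must be between 0 and 180 degrees")
    p_expected = not (P_SHADOW_DEG[0] < distance_deg < P_SHADOW_DEG[1])
    s_expected = distance_deg <= S_SHADOW_START_DEG
    return {"P": p_expected, "S": s_expected}


near_station = expected_arrivals(60.0)     # both wave types expected
shadow_station = expected_arrivals(120.0)  # neither expected
far_station = expected_arrivals(160.0)     # P-waves only, after crossing the core
```

Under this model, a station at 120° should record nothing at all from the earthquake.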

Something more to the story

In 1929, a large earthquake occurred near New Zealand. Seismologists were quick to study the seismic wave arrivals at seismic stations around the world, but Inge Lehmann studied them a little more closely than her peers.

She was puzzled by what she saw: seismometers located within the P-wave shadow zone of the earthquake, where no arrivals should be recorded, were showing signs of the earthquake’s waves. If the core was one large ball of liquid material, this should not be possible.

Lehmann suggested that these waves had travelled some distance inside the liquid core before bouncing off some other, previously unknown, boundary. This bouncing deflected the waves in another direction and meant that they found themselves arriving within the shadow zone.

To test this hypothesis, Inge Lehmann went on to study carefully many more seismic arrivals from around the world, and she eventually published her results in her revolutionary 1936 paper, P’ (or P-prime). Today, the boundary between the outer and inner core is commonly known as the ‘Lehmann discontinuity’.

Inge Lehmann’s theory was later confirmed with the development of more sensitive instruments.

Lehmann was a pioneer in the world of seismology and among women scientists, establishing a new theory about the Earth in a very much male-dominated world.

In 1971, the American Geophysical Union awarded her the William Bowie medal, its highest honour. Inge Lehmann went on to live to the age of 104 and published her last paper in 1987, at the age of 99.

A momentous discoverer and scientist indeed.